CONTEXT EFFECTS AND TOP-DOWN FEEDBACK

The acoustics of speech sounds vary with the characteristics of the talker's voice, coarticulation with neighboring sounds, and speaking rate. As a result, speech perception is highly context-dependent. The broader linguistic context (e.g., which word a listener expects in a sentence) may also influence speech perception. There is considerable debate over how listeners integrate this context with information from the speech signal, including whether higher-level linguistic information feeds back to influence early stages of speech perception.

One way to address this debate is to measure context effects in real time as the speech signal unfolds. For instance, listeners adjust their perception of temporal cues in speech, such as voice onset time (VOT), according to the talker's speaking rate. Using the visual-world eye-tracking paradigm, we have shown that listeners use the rate of the preceding sentence to interpret VOT, but they do not wait for subsequent rate information (signaled by the length of the following vowel) before doing so (Toscano & McMurray, 2015).

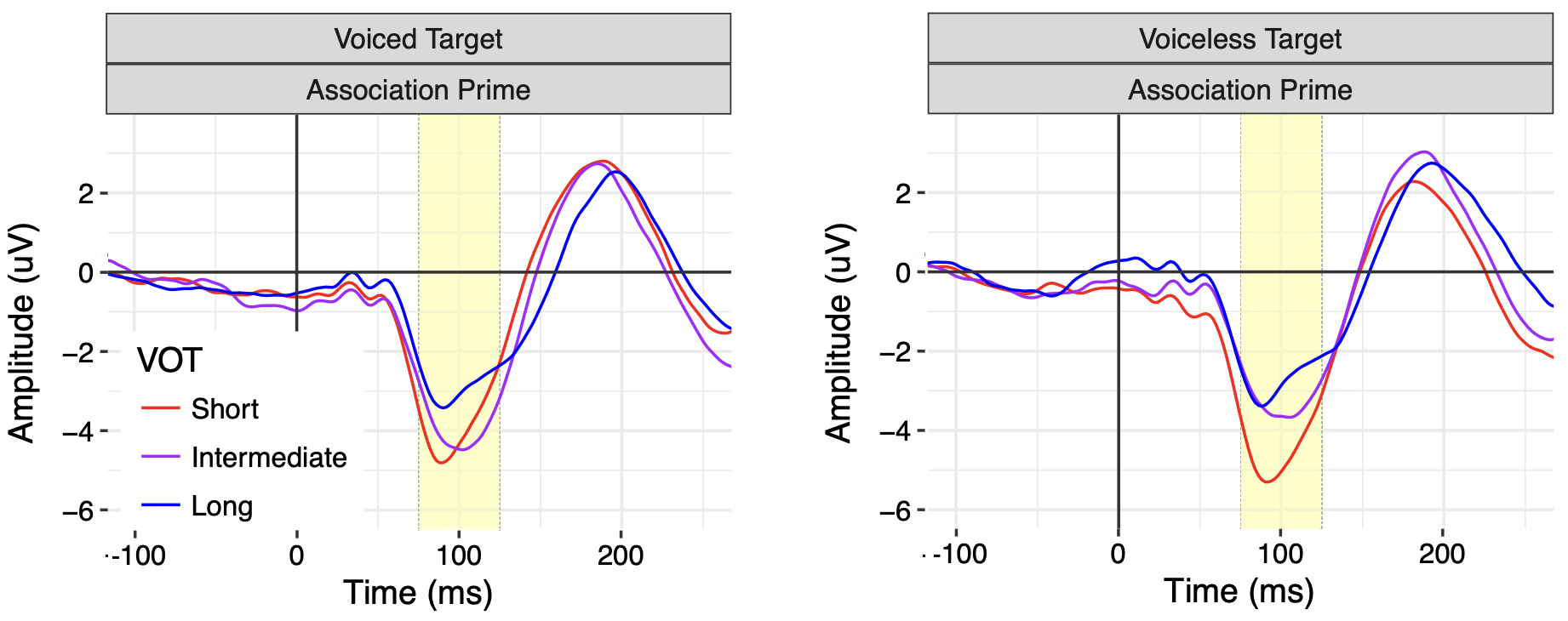

The N1 response, an event-related potential component that tracks early perceptual encoding of speech sounds, is modulated by semantic expectation (Getz & Toscano, 2019).

Together, these results shed light on how listeners perceive speech in context, demonstrating an important role for both acoustic context and top-down feedback from higher-level linguistic representations.

More information

- Getz, L.M., & Toscano, J.C. (2021). The time-course of speech perception revealed by temporally-sensitive neural measures. WIREs Cognitive Science, 12, e1541.

- Getz, L.M., & Toscano, J.C. (2019). Electrophysiological evidence for top-down lexical influences on early speech perception. Psychological Science, 30, 830-841.

- Toscano, J.C., & McMurray, B. (2015). The time-course of speaking rate compensation: Effects of sentential rate and vowel length on voicing judgments. Language, Cognition and Neuroscience, 30, 529-543.

- Toscano, J.C., & McMurray, B. (2012). Cue-integration and context effects in speech: Evidence against speaking-rate normalization. Attention, Perception, & Psychophysics, 74, 1284-1301.